Agent-Orchestrated Code Review

A proof-of-concept that demonstrates how multiple AI agents — orchestrated by a central coordinator — can collaborate to perform intelligent, automated code reviews. The demo explores agent selection, role specialization (coder, reviewer, tester, security auditor), and orchestration using an AI Foundry-style platform for end-to-end code quality automation.

Case Study: Multi-Agent Orchestration for Automated Code Review

This proof-of-concept (POC) demonstrates how an orchestrator agent coordinates several specialized AI agents to perform thorough, automated code reviews. The approach emphasizes role specialization (Reviewer, Coder, Security Auditor, Tester), orchestration logic (task routing, retries, aggregation), and human-in-the-loop checkpoints for high-risk or ambiguous findings.

The Challenge

- Manual code reviews are time-consuming and inconsistent across reviewers.

- Scaling code review across many pull requests requires either a large reviewer headcount or accepting a slower time-to-merge.

- Specialized checks (security, performance, style, architecture) need different expertise and toolsets.

- Integrating LLM outputs reliably into CI/CD while maintaining traceability and auditability is nontrivial.

The Agent-Orchestrated Solution

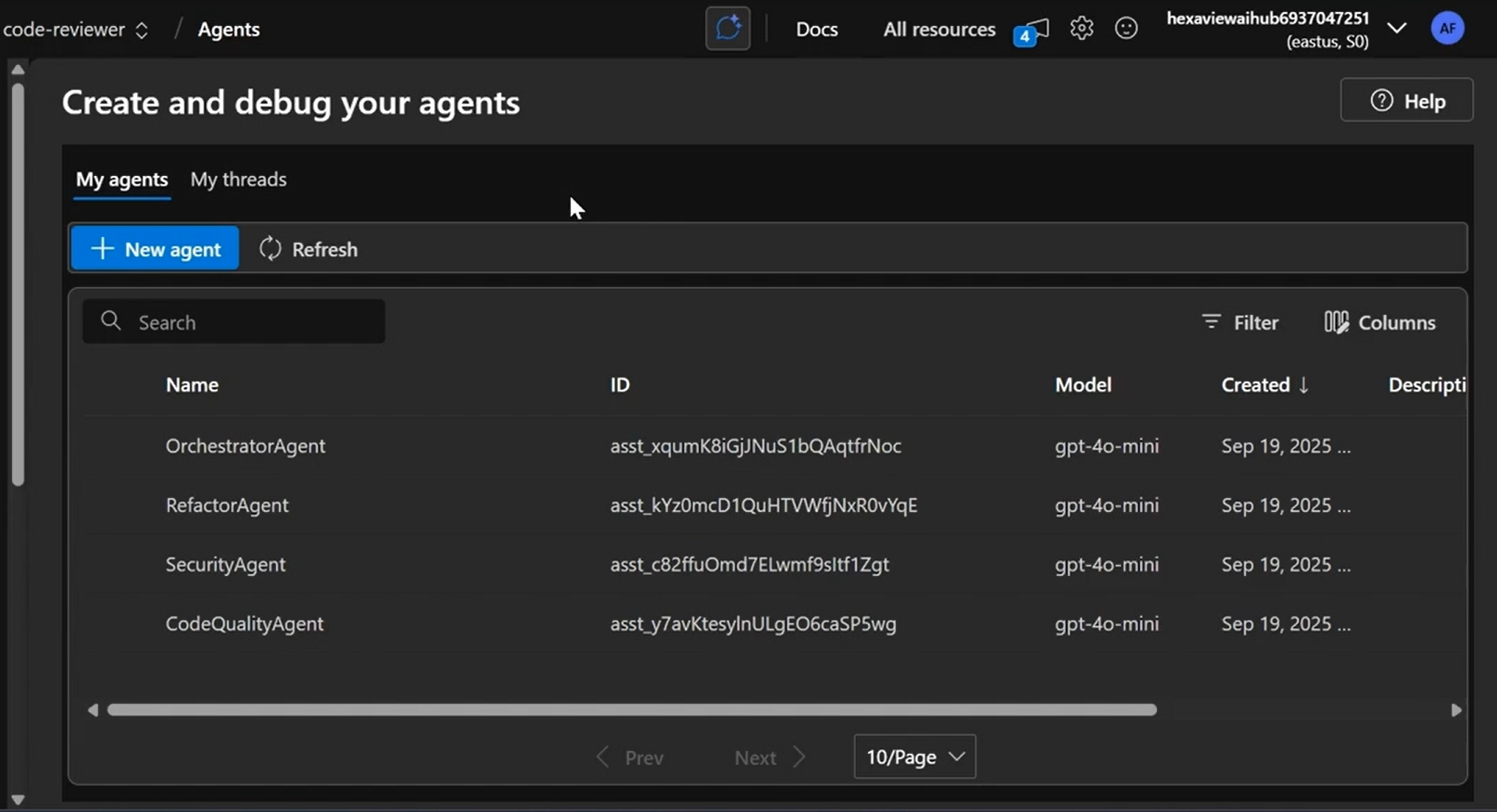

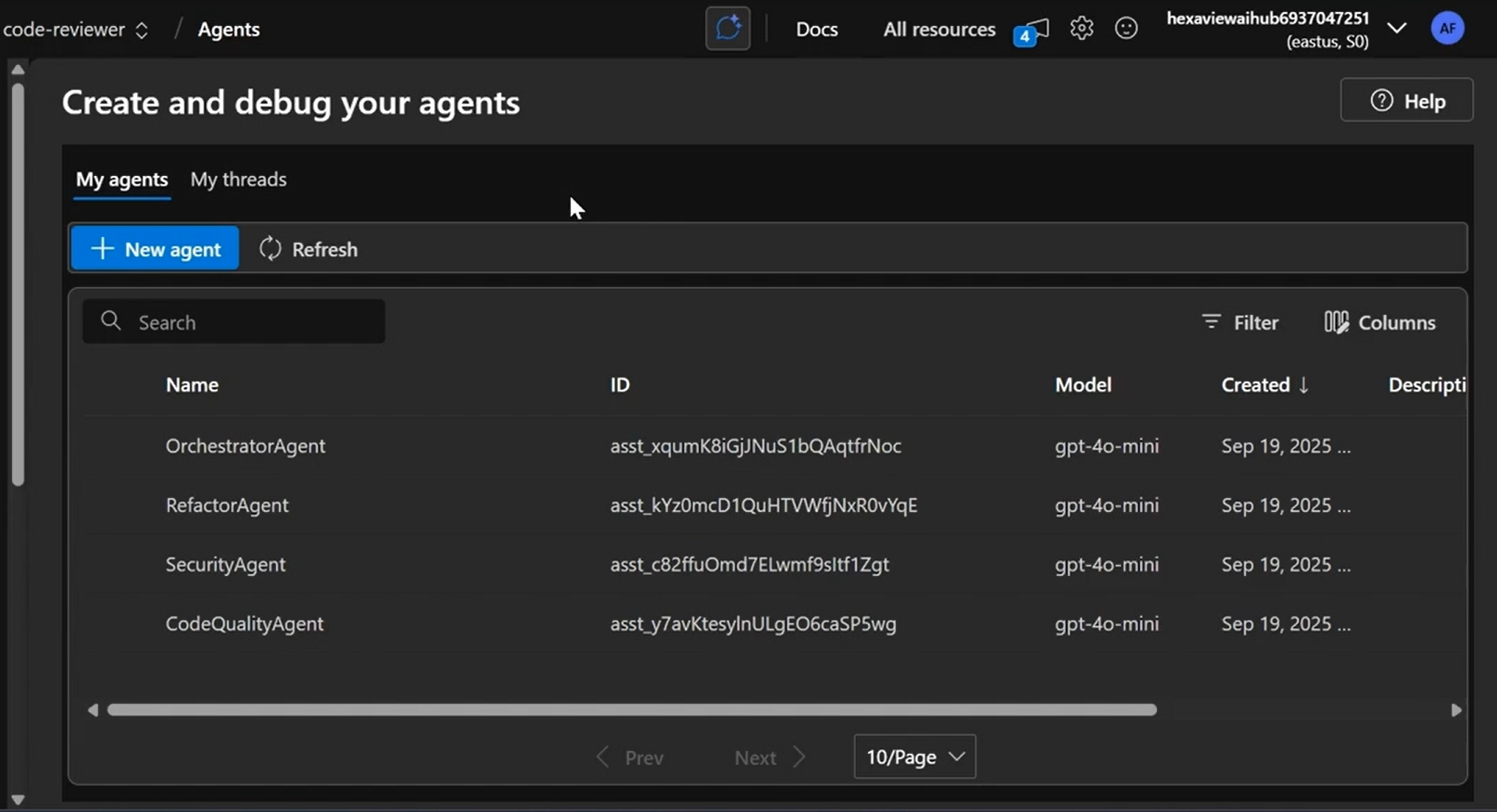

- Orchestrator Agent: an agent (or controller) that inspects incoming PRs, decides which specialist agents are required, and coordinates work distribution, retries, and result aggregation.

- Reviewer Agent(s): LLM-based reviewers that produce human-readable review comments, detect bugs, suggest refactors, and propose test cases.

- Security Auditor Agent: integrates static analysis (SAST) tools and combines their output with LLM reasoning to produce prioritized security findings.

- Tester Agent: auto-generates unit and integration tests, runs the test suites, and reports coverage and flaky-test signals.

- Coder / Fixer Agent: optionally creates patch suggestions or PR updates to address low-risk issues automatically (with human approval gates).

- Human-in-the-loop: gating mechanisms where maintainers approve high-impact changes and verify model suggestions.
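
Each specialist's structured output (findings, severity, suggested fixes) can be pinned down as a small schema and validated before the orchestrator trusts it. The sketch below assumes every agent replies with a JSON array; the `Finding` and `Severity` types, the field names, and the five-level severity scale are hypothetical illustrations, not part of any Foundry API.

```python
from __future__ import annotations

import json
from dataclasses import dataclass
from enum import IntEnum


class Severity(IntEnum):
    """Hypothetical risk scale; higher values mean higher risk."""
    INFO = 0
    LOW = 1
    MEDIUM = 2
    HIGH = 3
    CRITICAL = 4


@dataclass(frozen=True)
class Finding:
    agent: str                        # specialist that produced the finding
    file: str                         # path relative to the repository root
    line: int                         # 1-based line in the new revision
    severity: Severity
    confidence: float                 # agent's self-reported confidence, 0..1
    message: str                      # human-readable review comment
    rationale: str = ""               # explanation kept for traceability
    suggested_fix: str | None = None  # optional patch text

    def __post_init__(self) -> None:
        # Reject out-of-range values an LLM might emit instead of passing them on.
        if not 0.0 <= self.confidence <= 1.0:
            raise ValueError(f"confidence out of range: {self.confidence}")
        if self.line < 1:
            raise ValueError(f"line must be >= 1, got {self.line}")


def parse_findings(agent: str, raw: str) -> list[Finding]:
    """Parse an agent reply assumed to be a JSON array of finding objects.

    Raises an exception (e.g. ValueError, KeyError) on malformed output,
    so the orchestrator can retry the agent or discard the reply.
    """
    items = json.loads(raw)
    if not isinstance(items, list):
        raise ValueError("expected a JSON array of findings")
    return [
        Finding(
            agent=agent,
            file=str(item["file"]),
            line=int(item["line"]),
            severity=Severity[str(item["severity"]).upper()],
            confidence=float(item["confidence"]),
            message=str(item["message"]),
            rationale=str(item.get("rationale", "")),
            suggested_fix=item.get("suggested_fix"),
        )
        for item in items
    ]
```

Rejecting malformed replies at this boundary (unknown severity, out-of-range confidence, missing keys) is one way to make LLM output dependable enough to feed into CI/CD checks.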

The Journey: How the POC Operates (high-level workflow)

- PR ingestion: Orchestrator ingests a pull request or code snapshot.

- Intent & scope detection: Orchestrator classifies intent (bugfix, feature, refactor) and chooses which agents to invoke.

- Parallel agent execution: Reviewer, Security Auditor, and Tester agents run in parallel, each producing structured output (findings, severity, suggested fixes, tests).

- Aggregation & ranking: Orchestrator consolidates outputs, ranks findings by risk/confidence, and prepares a synthesized review report.

- Human review & action: Maintainer reviews the aggregated report; fixes are applied either manually or via the Coder Agent behind an approval gate.

- CI/CD integration: Findings are reflected in the PR checks (pass/fail/warnings), and auto-generated tests run in the pipeline.
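
The workflow above can be sketched as an `asyncio` pipeline: classify intent, route to specialists, run them concurrently with retries, then rank the merged findings. This is a minimal illustration under several assumptions: agents are plain async callables, the keyword-based `classify_intent` stands in for an LLM classifier, and `ROUTES`, the severity scale, and the retry/backoff values are all hypothetical.

```python
import asyncio
from dataclasses import dataclass
from typing import Awaitable, Callable, Dict, List


@dataclass(frozen=True)
class Finding:
    agent: str
    message: str
    severity: int      # hypothetical scale: 0 (info) .. 4 (critical)
    confidence: float  # 0..1


# Assumption: each specialist is an async callable that takes the PR diff.
Agent = Callable[[str], Awaitable[List[Finding]]]

# Hypothetical routing table: PR intent -> specialist agents to invoke.
ROUTES: Dict[str, List[str]] = {
    "bugfix": ["reviewer", "tester"],
    "feature": ["reviewer", "security", "tester"],
    "refactor": ["reviewer"],
}


def classify_intent(title: str) -> str:
    """Keyword heuristic standing in for an LLM-based intent classifier."""
    lowered = title.lower()
    if lowered.startswith("fix") or "bug" in lowered:
        return "bugfix"
    if "refactor" in lowered:
        return "refactor"
    return "feature"


async def run_with_retries(agent: Agent, diff: str, attempts: int = 3,
                           timeout: float = 30.0) -> List[Finding]:
    """Call one agent with a per-call timeout and exponential backoff."""
    for attempt in range(attempts - 1):
        try:
            return await asyncio.wait_for(agent(diff), timeout)
        except Exception:  # transient model/tool error or timeout
            await asyncio.sleep(0.05 * 2 ** attempt)
    # Final attempt: let any error propagate to the caller.
    return await asyncio.wait_for(agent(diff), timeout)


async def orchestrate(title: str, diff: str,
                      agents: Dict[str, Agent]) -> List[Finding]:
    selected = [n for n in ROUTES[classify_intent(title)] if n in agents]
    # Run the selected specialists concurrently; collect failures as values.
    results = await asyncio.gather(
        *(run_with_retries(agents[n], diff) for n in selected),
        return_exceptions=True,
    )
    findings: List[Finding] = []
    for name, result in zip(selected, results):
        if isinstance(result, BaseException):
            # Surface agent failures to the maintainer instead of dropping them.
            findings.append(Finding(name, f"agent failed: {result!r}", 2, 1.0))
        else:
            findings.extend(result)
    # Rank by risk first, then by confidence (highest first).
    findings.sort(key=lambda f: (f.severity, f.confidence), reverse=True)
    return findings
```

Passing `return_exceptions=True` to `asyncio.gather` means a single failing agent does not abort the whole review; its failure is reported as a finding so the maintainer still sees it.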

Key Features Demonstrated

- Agent specialization: clear separation of concerns between reviewer, security, and test agents.

- Orchestration & routing: dynamic, per-PR selection of agents based on intent and scope.

- Patch generation: low-risk automated patch proposals (subject to human approval).

- Explainability: agents produce rationale and traceable outputs to support maintainers.

- CI/CD & policy integration: checks mapped to pipeline gates and compliance rules.

- Replayability / audit logs: each agent’s decisions are recorded for audits and continuous improvement.
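
One way to make each agent's decisions replayable and tamper-evident is a hash-chained, append-only log: every record stores the SHA-256 digest of its predecessor, so editing an earlier entry breaks verification. The `AuditLog` class and its record fields are hypothetical; a production system would persist records to durable storage rather than an in-memory list.

```python
import hashlib
import json
import time
from dataclasses import dataclass, field
from typing import Any, Dict, List

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


@dataclass
class AuditLog:
    """In-memory, append-only, hash-chained log of agent decisions."""
    entries: List[Dict[str, Any]] = field(default_factory=list)

    @staticmethod
    def _digest(record: Dict[str, Any]) -> str:
        # Hash a canonical JSON encoding of every field except the hash itself.
        body = {k: v for k, v in record.items() if k != "hash"}
        canonical = json.dumps(body, sort_keys=True, separators=(",", ":"))
        return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

    def append(self, agent: str, action: str, rationale: str,
               payload: Dict[str, Any]) -> Dict[str, Any]:
        record = {
            "ts": time.time(),
            "agent": agent,
            "action": action,
            "rationale": rationale,
            "payload": payload,
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS,
        }
        record["hash"] = self._digest(record)
        self.entries.append(record)
        return record

    def verify(self) -> bool:
        """Detect edited, reordered, or removed records.

        Truncating the tail is not detected; that needs the latest hash
        anchored somewhere outside the log.
        """
        prev = GENESIS
        for record in self.entries:
            if record["prev_hash"] != prev or record["hash"] != self._digest(record):
                return False
            prev = record["hash"]
        return True

    def to_jsonl(self) -> str:
        """Serialize one JSON object per line for export to durable storage."""
        return "\n".join(json.dumps(r, sort_keys=True) for r in self.entries)
```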

Maintenance & Governance

- Human-in-the-loop checkpoints for safety and compliance.

- Policy rules determine which agents may auto-commit vs. require approval.

- Monitoring of agent performance (false-positive rate, suggested-fix acceptance rate).

- Model updates use canary evaluation and rollbacks to avoid regressions.
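
Policy rules that decide between auto-commit and human approval can be expressed as a small, testable function the orchestrator evaluates for every proposed fix. The thresholds, path patterns, agent names, and the `ProposedFix` type below are hypothetical placeholders for a team's own governance configuration; checking critical paths first reflects the conservative stance described above.

```python
from dataclasses import dataclass
from enum import Enum
from fnmatch import fnmatchcase


class Action(Enum):
    AUTO_COMMIT = "auto_commit"
    REQUIRE_APPROVAL = "require_approval"
    COMMENT_ONLY = "comment_only"


@dataclass(frozen=True)
class ProposedFix:
    agent: str
    path: str
    severity: int       # hypothetical scale: 0 (info) .. 4 (critical)
    confidence: float   # 0..1
    lines_changed: int


# Hypothetical policy knobs; real values belong in version-controlled config.
CRITICAL_PATHS = ("src/payments/*", "infra/*", "*.sql")
AUTO_COMMIT_AGENTS = frozenset({"coder"})
MAX_AUTO_LINES = 10
MIN_AUTO_CONFIDENCE = 0.9
MIN_SUGGEST_CONFIDENCE = 0.5


def decide(fix: ProposedFix) -> Action:
    # Production-critical code always needs explicit human approval.
    # fnmatch's "*" also matches "/", so "infra/*" covers subdirectories.
    if any(fnmatchcase(fix.path, pattern) for pattern in CRITICAL_PATHS):
        return Action.REQUIRE_APPROVAL
    # High-severity issues are never auto-fixed.
    if fix.severity >= 3:
        return Action.REQUIRE_APPROVAL
    # Small, high-confidence fixes from allow-listed agents may auto-commit.
    if (fix.agent in AUTO_COMMIT_AGENTS
            and fix.confidence >= MIN_AUTO_CONFIDENCE
            and fix.lines_changed <= MAX_AUTO_LINES):
        return Action.AUTO_COMMIT
    # Low-confidence suggestions are posted as comments, not patches.
    if fix.confidence < MIN_SUGGEST_CONFIDENCE:
        return Action.COMMENT_ONLY
    return Action.REQUIRE_APPROVAL
```

Keeping the policy as plain code (or plain data read by such code) makes it reviewable in the same PR flow it governs, and the acceptance-rate and false-positive metrics can then be tracked per `Action`.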

KPIs & Expected Outcomes (illustrative)

- Reduced mean time to review (MTTR) for small PRs (example: 30–60% faster for low-risk PRs); actual gains depend on the approval policy.

- Increased review consistency and coverage for security and style checks.

- Fewer trivial review comments needing developer time (automated fixes accepted for low-risk issues).

- Measurable audit trail: 100% of automated suggestions recorded with rationale for compliance.
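
These outcomes reduce to simple ratios over recorded suggestions plus a relative change in mean review time. The sketch assumes each suggestion's outcome is captured as three flags; the `SuggestionOutcome` type and the metric definitions are illustrative choices, not measured results or a standard.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Callable, Sequence


@dataclass(frozen=True)
class SuggestionOutcome:
    accepted: bool        # maintainer applied the suggestion
    false_positive: bool  # maintainer dismissed it as incorrect
    logged: bool          # a rationale record exists in the audit log


def _rate(outcomes: Sequence[SuggestionOutcome],
          predicate: Callable[[SuggestionOutcome], bool]) -> float:
    if not outcomes:
        raise ValueError("no outcomes recorded")
    return sum(1 for o in outcomes if predicate(o)) / len(outcomes)


def acceptance_rate(outcomes: Sequence[SuggestionOutcome]) -> float:
    return _rate(outcomes, lambda o: o.accepted)


def false_positive_rate(outcomes: Sequence[SuggestionOutcome]) -> float:
    return _rate(outcomes, lambda o: o.false_positive)


def audit_coverage(outcomes: Sequence[SuggestionOutcome]) -> float:
    """Share of automated suggestions with a recorded rationale (target: 1.0)."""
    return _rate(outcomes, lambda o: o.logged)


def review_time_reduction(baseline_hours: Sequence[float],
                          pilot_hours: Sequence[float]) -> float:
    """Relative drop in mean time to review; 0.4 means it fell by 40%."""
    before, after = mean(baseline_hours), mean(pilot_hours)
    return (before - after) / before
```

With made-up inputs, `review_time_reduction([10, 6, 8], [4, 5, 3])` returns `0.5`, because the mean drops from 8 to 4 hours.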

Implementation Options

Cloud POC / SaaS Approach

- Deploy the orchestrator and agents on managed AI services (e.g., Azure AI Foundry or equivalent).

- Integrate with GitHub/GitLab via webhooks for PR ingestion.

- Use managed secrets, role-based access, and compliance features for enterprise readiness.

- Suitable for teams that want a quick POC and iterative tuning.
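
For GitHub, PR ingestion via webhooks typically means verifying the `X-Hub-Signature-256` header (an HMAC-SHA256 of the raw request body, keyed by the webhook secret) before trusting the payload, then filtering for `pull_request` events. The handler below is a framework-agnostic sketch: `handle_webhook`, the returned job dictionary, and the set of `action` values that trigger a review are assumptions.

```python
from __future__ import annotations

import hashlib
import hmac
import json

# Assumption: these pull_request actions are the ones worth reviewing.
REVIEW_ACTIONS = {"opened", "synchronize", "reopened"}


def verify_signature(secret: bytes, body: bytes, header: str | None) -> bool:
    """Check GitHub's X-Hub-Signature-256 header against the raw body."""
    if not header or not header.startswith("sha256="):
        return False
    expected = "sha256=" + hmac.new(secret, body, hashlib.sha256).hexdigest()
    # Constant-time comparison avoids leaking how many characters matched.
    return hmac.compare_digest(expected.encode(), header.encode())


def handle_webhook(secret: bytes, headers: dict[str, str],
                   body: bytes) -> dict | None:
    """Turn a webhook delivery into a review job, or None if it is ignored.

    Assumes `headers` uses GitHub's canonical header casing; real HTTP
    frameworks may need a case-insensitive lookup instead.
    """
    if not verify_signature(secret, body, headers.get("X-Hub-Signature-256")):
        raise PermissionError("invalid webhook signature")
    if headers.get("X-GitHub-Event") != "pull_request":
        return None
    event = json.loads(body)
    if event.get("action") not in REVIEW_ACTIONS:
        return None
    pr = event["pull_request"]
    return {
        "repo": event["repository"]["full_name"],
        "number": pr["number"],
        "title": pr["title"],
        "diff_url": pr["diff_url"],
    }
```

GitLab uses a different mechanism: it sends the configured secret token verbatim in the `X-Gitlab-Token` header, so the equivalent check there is a constant-time comparison against the stored token.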

On-Premise / Hybrid Enterprise

- Run orchestration and sensitive agents behind the corporate firewall for data sovereignty.

- Connect to internal SAST tools, on-prem CI, and proprietary test runners.

- Human-in-the-loop controls can be enforced via enterprise identity and approval flows.

Risks & Mitigations

- Overtrust in LLM fixes: require explicit approval for any change that affects production-critical code.

- False positives / noise: tune agent scoring thresholds and feedback loops (reject/accept signals).

- Security of model prompts & data: use encryption, RBAC, and private model endpoints for proprietary code.

- Regulatory/audit concerns: produce auditable decision logs for compliance.
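
The feedback loop for false positives can be as simple as per-category confidence thresholds that rise when maintainers reject too many surfaced findings and fall when they accept most of them. This is one possible heuristic, not a prescribed algorithm; the `ThresholdTuner` class, the 20% target rejection rate, the step size, and the window length are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import DefaultDict


@dataclass
class ThresholdTuner:
    """Per-category confidence thresholds nudged by maintainer feedback.

    Hypothetical heuristic: after every `window` accept/reject signals in a
    category, raise its threshold if too many surfaced findings were
    rejected, otherwise lower it, staying within [floor, ceiling].
    """
    target_reject_rate: float = 0.2
    step: float = 0.05
    floor: float = 0.3
    ceiling: float = 0.95
    window: int = 20
    initial: float = 0.5
    thresholds: DefaultDict[str, float] = field(init=False)
    _accepted: DefaultDict[str, int] = field(init=False)
    _rejected: DefaultDict[str, int] = field(init=False)

    def __post_init__(self) -> None:
        self.thresholds = defaultdict(lambda: self.initial)
        self._accepted = defaultdict(int)
        self._rejected = defaultdict(int)

    def should_surface(self, category: str, confidence: float) -> bool:
        """Only findings at or above the category threshold reach the PR."""
        return confidence >= self.thresholds[category]

    def record(self, category: str, accepted: bool) -> None:
        """Register one maintainer signal for a surfaced finding."""
        if accepted:
            self._accepted[category] += 1
        else:
            self._rejected[category] += 1
        total = self._accepted[category] + self._rejected[category]
        if total < self.window:
            return
        reject_rate = self._rejected[category] / total
        current = self.thresholds[category]
        if reject_rate > self.target_reject_rate:
            self.thresholds[category] = min(self.ceiling, current + self.step)
        else:
            self.thresholds[category] = max(self.floor, current - self.step)
        # Start a fresh window so later adjustments reflect recent feedback.
        self._accepted[category] = 0
        self._rejected[category] = 0
```

Resetting the counters after each adjustment keeps the tuner responsive to prompt or model changes; a sliding window over recent signals would be a reasonable alternative.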

Learnings & Recommendations

- Start with a narrow scope (e.g., style & unit-test generation) and iterate to add security and architectural review agents.

- Maintain human oversight and conservative auto-merge policies early in adoption.

- Instrument and measure the acceptance rate of agent suggestions to continuously improve prompts and models.

- Treat orchestration as policy + routing logic — the orchestrator is a critical control plane for safety and governance.

Screenshots